By Tim Huckaby

Chief Technology Officer at Lucihub | AI Consultant

October 12, 2023

I have been involved in (and am an end user of) a few LLM (Large Language Model) applications since the GPT hype took off, which feels like more than a year ago. The power of content generation with an LLM is seemingly irresistible to many industries, software companies included. As with any hot new technology, I’m seeing implementations of LLMs in software that just do not make sense. Many are hopping on the GPT bandwagon purely because of the hype. That is understandable: you can raise money on hype by claiming your application is “AI”. Raising money on hype is not my mission. The mission is to design, develop and deploy a wildly useful application and establish ourselves as thought leaders in the professional video production industry. The function of this new application is “to enable users to swiftly convert their ideas into gripping scripts, precise shot lists, and vivid visual storyboards”.

One of my self-appointed missions at Lucihub is to make sure we do not make the mistakes I am seeing so consistently in other LLM applications. At the same time, I preach to the team that we are pioneers because no one has built an application like this yet. We intend to establish our position through the technical prowess it takes to pioneer this LLM application. There are four main pillars of the application:

1. Design and build an LLM application where the user has no idea they are even using an LLM.

2. Build that application on a cloud platform that can handle enterprise scale with the security and reliability needed for an enterprise application.

3. Ensure the generation of quality content through complex and sophisticated prompt engineering.

4. Deliver exceptional user interaction design so that the user can effectively consume and edit the generated content.

Let me go through these four pillars one by one.

Most Content-Producing LLM Applications Should Not Be Chats

If they were, you’d simply integrate ChatGPT or Google Bard into your application through the chat API and call it good. For applications that do require content creation, simply integrating an API call to GPT is going to produce less than satisfactory results and performance. The output will also, of course, be subject to AI hallucinations. If you are not familiar with that term, an AI hallucination is when the LLM produces information that sounds plausible but is false or fabricated.

The LLM application we are building (and frankly all LLM applications) requires a context-driven, wizard-like approach in the UI, where the user is guided through a templated process of selections to ensure quality output from the LLM. Sophisticated prompt engineering happens “under the hood”. In other words, a good content-creating LLM app shouldn’t require the user to even think about, let alone enter, manual prompts, iterating back and forth with the LLM in the hope of landing on the right content. A good content-creating app removes the need for a human to sit in that loop.
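To make the wizard idea concrete, here is a minimal Python sketch of how guided selections might be validated and turned into a prompt that the user never sees. It is an illustration only, not Lucihub's implementation: the option values, the `WizardSelections` fields, the length limits and the prompt wording are all assumptions made for this example.

```python
from dataclasses import dataclass

# Hypothetical wizard options; the real application's choices are not
# described in this article.
ALLOWED_VIDEO_TYPES = {"product demo", "testimonial", "event recap"}
ALLOWED_TONES = {"upbeat", "formal", "cinematic"}


@dataclass(frozen=True)
class WizardSelections:
    video_type: str
    tone: str
    length_seconds: int
    idea: str  # the only free-text field the user supplies


def build_prompt(sel: WizardSelections) -> str:
    """Validate the guided selections and assemble the hidden prompt."""
    if sel.video_type not in ALLOWED_VIDEO_TYPES:
        raise ValueError(f"unsupported video type: {sel.video_type!r}")
    if sel.tone not in ALLOWED_TONES:
        raise ValueError(f"unsupported tone: {sel.tone!r}")
    if not 15 <= sel.length_seconds <= 600:
        raise ValueError("length must be between 15 and 600 seconds")
    return (
        "You are a professional video scriptwriter.\n"
        f"Video type: {sel.video_type}\n"
        f"Tone: {sel.tone}\n"
        f"Target length: {sel.length_seconds} seconds\n"
        "Format each scene as SCENE <n>, followed by VISUAL and VOICEOVER lines.\n"
        f"Idea: {sel.idea.strip()}"
    )


if __name__ == "__main__":
    selections = WizardSelections("product demo", "upbeat", 60, "Launch of our new app")
    print(build_prompt(selections))
```

Because every field except the idea comes from a fixed set of choices, the prompt stays within a shape the team has already tested, and the user never has to iterate on wording by hand.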

Platform Choices

We currently run a hybrid cloud setup where the main software suite runs on AWS and all the AI services run on Azure. Over the next few months, we’ll move everything over from AWS to Azure for a variety of reasons (which really do warrant their own article). The big reason is twofold:

1. Microsoft has proven itself as a leader in enterprise-quality, scalable and reliable AI services for the cloud.

2. Our product roadmap calls for AI embedded throughout the software suite, top to bottom. Microsoft is the current leader in the space race for AI services.

We have chosen to use Microsoft’s Azure OpenAI instead of OpenAI’s native ChatGPT API or Google’s Bard. OpenAI, the company, has faced a number of recent data security, privacy and copyright concerns with ChatGPT. Coming a bit late to the game, Google’s Bard has its own share of issues. Microsoft’s Azure OpenAI is a tailored enterprise solution that addresses data integrity issues by isolating customer data from OpenAI's operations. In this manner, Microsoft has:

· Distanced itself from the company OpenAI.

· Ensured enterprise trust, asserted Azure's prominence, and solidified its leadership in AI.

· Provided autonomous solution development and data security assurance within the enterprise AI landscape.

Prompt Engineering

We have chosen Microsoft’s Semantic Kernel to orchestrate the prompt engineering process. Semantic Kernel is an open-source set of services for sophisticated prompt engineering orchestration that Microsoft itself uses throughout its organization. It uses natural language prompting to create and execute AI tasks across multiple languages and platforms. What Semantic Kernel does for us is structure sophisticated prompting so that it can be interpreted and understood by the generative AI model in Azure OpenAI.
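The general idea of prompt orchestration can be sketched in plain Python as a chain of prompt templates, where the output of one step becomes an input to the next. To be clear, this is not the Semantic Kernel API and not our production code: the `STEPS` templates, `run_pipeline` and `fake_model` are hypothetical names invented for this illustration, and the model is a stub so the sketch runs without any cloud service.

```python
from typing import Callable, Dict, List, Tuple

# Any function that maps a prompt to generated text can act as the model.
Model = Callable[[str], str]

# Each step is (name of the output variable, prompt template).
# These templates are hypothetical examples.
STEPS: List[Tuple[str, str]] = [
    ("script", "Write a short video script about: {idea}"),
    ("shot_list", "List the shots needed to film this script:\n{script}"),
]


def run_pipeline(idea: str, model: Model) -> Dict[str, str]:
    """Run each prompt in order, feeding earlier outputs into later templates."""
    context: Dict[str, str] = {"idea": idea}
    for output_name, template in STEPS:
        prompt = template.format(**context)
        context[output_name] = model(prompt)
    return context


def fake_model(prompt: str) -> str:
    """Stand-in for a real model call so the sketch runs offline."""
    return f"[generated from a {len(prompt)}-character prompt]"


if __name__ == "__main__":
    result = run_pipeline("a 30-second ad for a bakery", fake_model)
    for name in ("script", "shot_list"):
        print(f"{name}: {result[name]}")
```

In a real deployment the stub would be replaced by a call to the hosted model, and an orchestration framework would handle the templating, chaining and error handling that this sketch only hints at.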

User Interaction Design

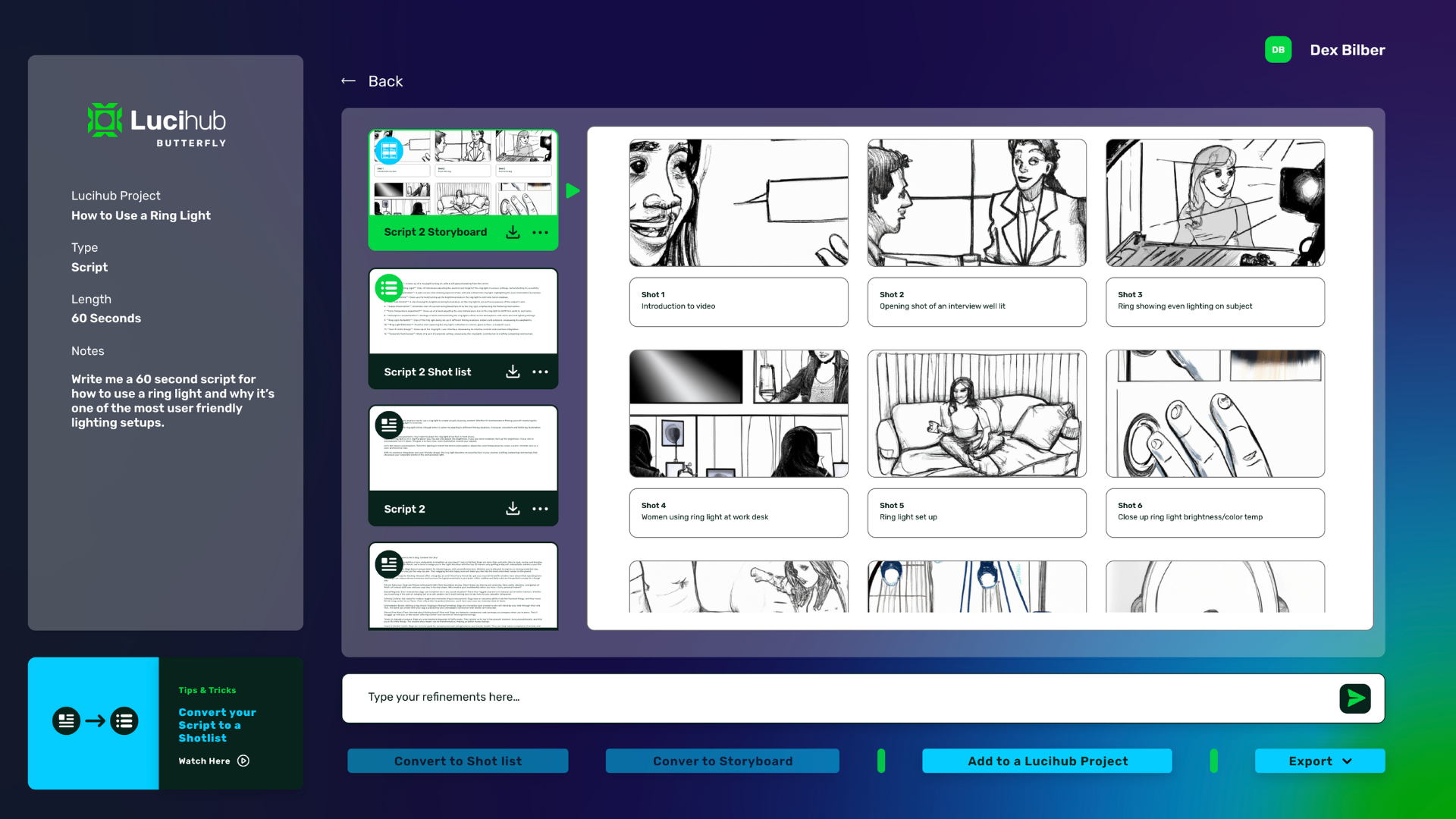

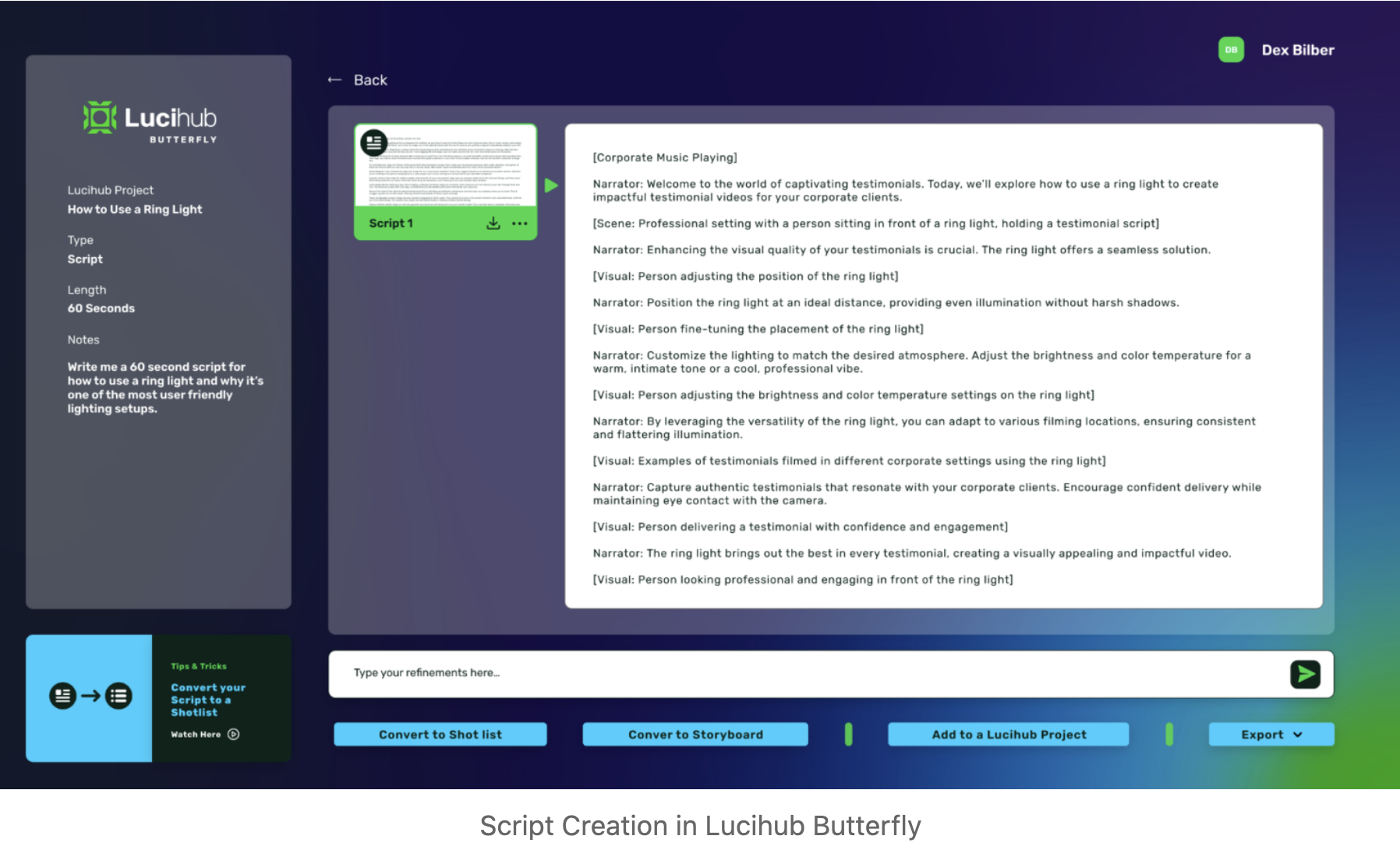

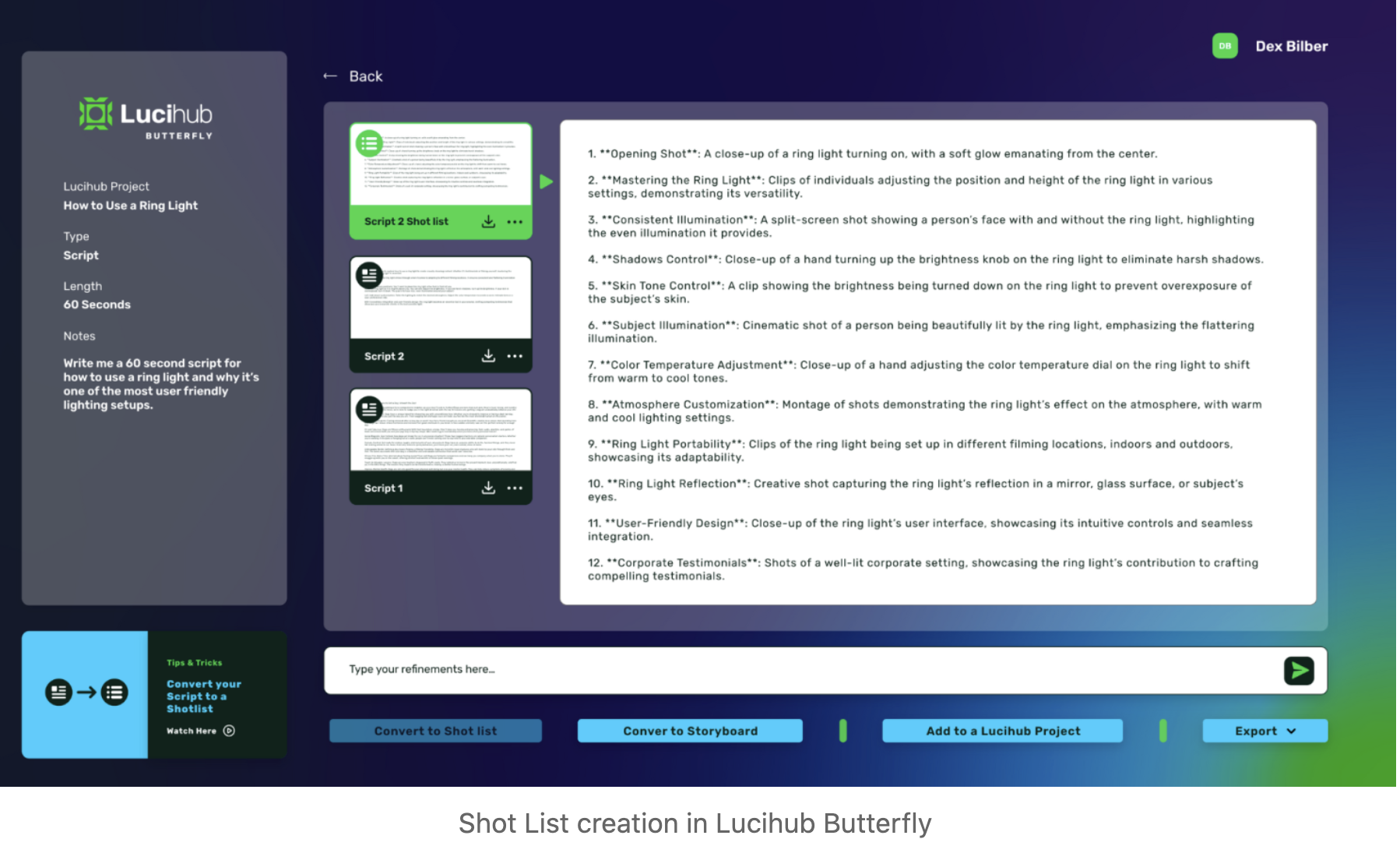

What ChatGPT lacks in UI it makes up for in raw capability and power. OpenAI has provided a simple user interface that produces raw content, and that is good enough for the requirements of ChatGPT. For us, though, outstanding user interaction design has to be a design goal. We have to structure and format any content that the LLM produces to make it easy to read and consume. If you have ever seen a script for broadcast television or a movie, you know that it is formatted so that it is easy to follow. We have to do the same to be successful. Once a script is generated (with the ability to edit it, of course) and storyboards of the scenes are generated, a shot list is automatically created. A shot list is a bulleted list of the raw video footage that the content creators need to produce. It has to be structured and formatted so that the content creators can easily use it as a checklist for what needs to be filmed.
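As a small illustration of turning generated content into something usable on set, the following Python sketch renders a shot list as a checklist grouped by scene. The `Shot` fields and the text layout are assumptions made for this example and do not reflect the application's actual data model or UI.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Shot:
    scene: int
    description: str
    done: bool = False  # ticked off once the footage has been captured


def format_shot_list(shots: List[Shot]) -> str:
    """Render shots grouped by scene as a plain-text checklist."""
    lines: List[str] = []
    current_scene: Optional[int] = None
    # sorted() is stable, so shots keep their original order within a scene.
    for shot in sorted(shots, key=lambda s: s.scene):
        if shot.scene != current_scene:
            current_scene = shot.scene
            lines.append(f"Scene {shot.scene}")
        mark = "x" if shot.done else " "
        lines.append(f"  [{mark}] {shot.description}")
    return "\n".join(lines)


if __name__ == "__main__":
    shots = [
        Shot(2, "Close-up of logo"),
        Shot(1, "Wide shot of office", done=True),
        Shot(1, "Interview, medium shot"),
    ]
    print(format_shot_list(shots))
```

Grouping by scene and showing a tick box per shot keeps the list scannable while filming, which is the point of structuring the raw LLM output rather than displaying it as free text.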

Summary

This article focuses on guidance for applications that use LLMs in content creation. It explores how, at Lucihub, we are using Azure OpenAI in combination with Semantic Kernel to automate the creation of the script, storyboards and shot lists for a professional video project.

It summarizes the impact of LLMs on streamlining content production processes, improving efficiency, and ensuring high-quality output through sophisticated and complex prompt engineering orchestrated by Semantic Kernel. It also touches on the challenges and opportunities that arise when integrating LLMs into content creation workflows.